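Preface

Architecture

We use the stacked topology, in which etcd runs on the control-plane nodes themselves.

My environment has no LB component, so haproxy takes its place. Because haproxy itself needs to be highly available, keepalived is layered in front of it.

If you build on Alibaba Cloud, you can treat its LB as robust.

Machines

All machines run CentOS 7.

There are three nodes. All of them are control-plane nodes and also act as worker (Node) nodes. keepalived + haproxy provide high availability for the control-plane component apiserver.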

| hostname | ip | type |
|---|---|---|
| k8sapi | 10.200.16.100 | keepalived vip |
| k8s01 | 10.200.16.101 | master keepalived (MASTER) haproxy |
| k8s02 | 10.200.16.102 | master keepalived (BACKUP) haproxy |
| k8s03 | 10.200.16.103 | master |

Make sure every hostname in the table resolves to its IP on the internal network.

Make sure each machine's system hostname is set to the hostname listed in the table.
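One way to meet both requirements without DNS is to maintain /etc/hosts on every machine. The following is a minimal sketch under that assumption; `render_hosts` and `add_hosts` are hypothetical helper names, not part of any tool used in this document.

```shell
#!/bin/bash
# Hypothetical helper: print the /etc/hosts entries for the table above.
render_hosts() {
  cat <<'EOF'
10.200.16.100 k8sapi
10.200.16.101 k8s01
10.200.16.102 k8s02
10.200.16.103 k8s03
EOF
}

# Append only the entries whose hostname is not yet present in the file.
# $1: hosts file to update (defaults to /etc/hosts).
add_hosts() {
  file="${1:-/etc/hosts}"
  render_hosts | while read -r ip name; do
    grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" \
      || echo "${ip} ${name}" >> "$file"
  done
}
```

Calling `add_hosts` twice leaves the file unchanged the second time. The system hostname itself can then be set with, for example, `hostnamectl set-hostname k8s01` on the first node.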

Traffic flow:

client->keepalived(vip:8443)->haproxy(vip:8443)-> all:6443

All nodes

Time synchronization

yum install chrony -y

sed -i '1a server cn.pool.ntp.org prefer iburst' /etc/chrony.conf

systemctl restart chronyd

systemctl enable chronyd

chronyc activity

System configuration

# Load kernel modules
modprobe overlay
modprobe br_netfilter

# Add sysctl settings

cat > /etc/sysctl.d/99-kubernetes-cri.conf <<EOF

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# Apply the settings
sysctl --system

# Stop the firewall and flush its rules
systemctl stop firewalld.service iptables.service
systemctl disable firewalld.service
systemctl disable iptables.service
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

# Disable SELinux. On CentOS 7, /etc/sysconfig/selinux is a symlink to /etc/selinux/config, so only the target is edited
setenforce 0
sed -i 's@^\(SELINUX=\).*@\1disabled@' /etc/selinux/config

# Turn off swap, which kubelet 1.18 requires. If swap is also listed in /etc/fstab, comment that entry out as well
swapoff -a

# Install ipvs
yum install ipvsadm -y
ipvsadm --clear

cat> /etc/sysconfig/modules/ipvs.modules << 'EOF'
#!/bin/bash
# Load every ipvs kernel module that ships with the running kernel
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls $ipvs_mods_dir | grep -o "^[^.]*"); do
    /sbin/modinfo -F filename $i &> /dev/null
    if [ $? -eq 0 ]; then
        /sbin/modprobe $i
    fi
done
EOF
chmod +x /etc/sysconfig/modules/ipvs.modules
/etc/sysconfig/modules/ipvs.modules
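`swapoff -a` only lasts until the next reboot, so any swap entry in /etc/fstab has to be commented out too. A minimal sketch of that step follows; `disable_swap_in_fstab` is a hypothetical helper name, and it assumes swap entries carry the word `swap` as a whitespace-delimited field.

```shell
#!/bin/bash
# Comment out every active swap entry in an fstab-style file.
# $1: path to the file (defaults to /etc/fstab). A .bak copy is kept.
disable_swap_in_fstab() {
  fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; prefix the rest with "#"
  # when they contain "swap" as a separate field.
  sed -i.bak -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}
```

Running `disable_swap_in_fstab` with no argument edits /etc/fstab in place and leaves the original as /etc/fstab.bak.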

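An additional set of kernel parameter tunings

/etc/sysctl.d/99-sysctl.conf

### kernel
# Disable the magic SysRq key combinations
kernel.sysrq = 0
# Kernel message queues
kernel.msgmnb = 65536
kernel.msgmax = 65536
# Append the PID to core file names
kernel.core_uses_pid = 1
# Path and name pattern for core files
kernel.core_pattern = /corefile/core-%e-%p-%t
# System-wide limit on the number of file handles open across all processes
fs.file-max = 6553500
### tcp/ip
# Enable forwarding
net.ipv4.ip_forward = 1
# Keep reverse-path filtering off (it is off by default as well)
net.ipv4.conf.default.rp_filter = 0
net.ipv4.conf.default.accept_source_route = 0
# Enable TCP window scaling
net.ipv4.tcp_window_scaling = 1
# For WAN access: enable selective acknowledgements so the peer only resends lost segments, improving receive performance at the cost of extra CPU
net.ipv4.tcp_sack = 1
# Number of SYN retransmissions when opening a connection
net.ipv4.tcp_syn_retries = 5
# Number of retries before giving up on answering a TCP request
net.ipv4.tcp_retries1 = 3
# Number of SYN-ACK retransmissions
net.ipv4.tcp_synack_retries = 2
# Idle time, in seconds, before keepalive probing starts on an established connection with no traffic
net.ipv4.tcp_keepalive_time = 60
# Number of keepalive probes
net.ipv4.tcp_keepalive_probes = 3
# Interval between keepalive probes, in seconds
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_retries2 = 5
net.ipv4.tcp_fin_timeout = 5
# Enable SYN cookies to absorb SYN bursts; of little help once the server is truly overloaded
net.ipv4.tcp_syncookies = 1
# Maximum number of packets queued when an interface receives packets faster than the kernel can process them. Raised 10x
net.core.netdev_max_backlog = 10240
# Maximum number of queued connection requests not yet acknowledged by the peer. Raised 20x
net.ipv4.tcp_max_syn_backlog = 10240
# Maximum length of the listen queue of each port. Raised well above the default
net.core.somaxconn=10240
# Enable timestamps
net.ipv4.tcp_timestamps=1
# Only effective when this host acts as the client; requires timestamps to be enabled
net.ipv4.tcp_tw_reuse = 1
# Maximum number of TIME_WAIT sockets
net.ipv4.tcp_max_tw_buckets = 20000
net.ipv4.ip_local_port_range = 1024 65500
# Handling of orphaned TCP connections that belong to no process
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_max_orphans = 327680
# Connection-tracking limit and timeout when iptables is in use; no effect if iptables is not used
net.netfilter.nf_conntrack_max = 6553500
net.netfilter.nf_conntrack_tcp_timeout_established = 150
# The parameters below can leave the system inaccessible if set incorrectly
#net.ipv4.tcp_mem = 94500000 915000000 927000000
#net.ipv4.tcp_rmem = 4096 87380 4194304
#net.ipv4.tcp_wmem = 4096 16384 4194304
#net.core.wmem_default = 8388608
#net.core.rmem_default = 8388608
#net.core.rmem_max = 16777216
#net.core.wmem_max = 16777216
#kernel.shmmax = 68719476736
#kernel.shmall = 4294967296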
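A file this long is easy to mistype, for instance by leaving a stray quote at the end of a value. The sketch below is one possible pre-check before running `sysctl --system`; `lint_sysctl_conf` is a hypothetical helper, and its idea of a valid line (blank, comment, or `key = value` without quote characters) is an assumption that fits the file above rather than the full sysctl.d syntax.

```shell
#!/bin/bash
# Print (with line numbers) every line that is neither blank, a comment,
# nor "key = value" with a value free of quote characters.
# Returns non-zero when at least one suspicious line is found.
# $1: path to the sysctl configuration file.
lint_sysctl_conf() {
  # The '"'"' sequence embeds a single quote inside the single-quoted
  # pattern, so the final bracket expression reads [^"'].
  ! grep -nEv '^[[:space:]]*([#;].*)?$|^[[:space:]]*[a-z0-9_.-]+[[:space:]]*=[[:space:]]*[^"'"'"']+$' "$1"
}
```

A typical use would be `lint_sysctl_conf /etc/sysctl.d/99-sysctl.conf && sysctl --system`, so the settings are only applied when nothing is flagged.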

Install the container runtime

yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2
yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo

Choose a version

Be sure to pick the newest container runtime version that is compatible with the Kubernetes version you are installing.

https://kubernetes.io/zh/docs/setup/production-environment/container-runtimes/#docker

yum list docker-ce --showduplicates | sort -r

===

docker-ce.x86_64 3:20.10.7-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.6-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.5-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.4-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.3-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:20.10.1-3.el7 docker-ce-stable

#################### Pick the version to install ####################

docker_version=20.10.7 # for example, 20.10.7
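The value for `docker_version` is the middle part of the version column in the listing above, without the epoch prefix (`3:`) and the release suffix (`-3.el7`). As a small sketch, the hypothetical helper `docker_versions` below extracts just those version strings, newest first; which one is compatible with your Kubernetes release still has to be checked by hand. It assumes a `sort` that supports `-V`, as GNU sort does.

```shell
#!/bin/bash
# Read "yum list docker-ce --showduplicates" output on stdin and print the
# bare Docker versions (no epoch, no release suffix), newest first.
docker_versions() {
  awk '$1 ~ /^docker-ce\./ {print $2}' \
    | sed -E 's/^[0-9]+://; s/-.*$//' \
    | sort -rV
}
```

For example, `yum list docker-ce --showduplicates | docker_versions` would print one version per line for the listing shown above.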

Install and start

# Install a Docker version compatible with Kubernetes
yum install -y docker-ce-${docker_version} docker-ce-cli-${docker_version}
#sed -i '/ExecStart=/a ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT' /usr/lib/systemd/system/docker.service
mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"data-root": "/export/docker-data-root",

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

EOF

systemctl daemon-reload

systemctl restart docker

systemctl enable docker

Install kubelet, kubeadm and kubectl

Alibaba mirror:

https://developer.aliyun.com/mirror/kubernetes

Official installation guide: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

- Official Google repository

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

- Alibaba mirror repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

- Install

# List the available kube* versions
yum list kube* --showduplicates --disableexcludes=kubernetes
# Choose the package version to install (including the RPM release suffix)
pkg_version=1.20.8-0
# Install the kube management tools and kubelet
yum install -y kubelet-${pkg_version} kubeadm-${pkg_version} kubectl-${pkg_version} --disableexcludes=kubernetes

systemctl enable --now kubelet

High-availability nodes

Install the load balancer haproxy and the high-availability component keepalived

Run on k8s01 and k8s02

- Install

yum install haproxy keepalived -y

- haproxy configuration

cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.default

cat>/etc/haproxy/haproxy.cfg<<EOF

global

log /dev/log local0

log /dev/log local1 notice

daemon

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 1

timeout http-request 10s

timeout queue 20s

timeout connect 5s

timeout client 20s

timeout server 20s

timeout http-keep-alive 10s

timeout check 10s

frontend apiserver

bind *:8443

mode tcp

option tcplog

default_backend apiserver

backend apiserver

option httpchk GET /healthz

http-check expect status 200

mode tcp

option ssl-hello-chk

balance roundrobin

server k8s01 10.200.16.101:6443 check
server k8s02 10.200.16.102:6443 check
server k8s03 10.200.16.103:6443 check

EOF
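Each `server` line in a haproxy backend should carry its own name. As a small sketch, the hypothetical helper `render_backend_servers` below generates those lines from `name=ip` pairs so the names cannot collide by copy-and-paste; the port 6443 is the apiserver port used throughout this document.

```shell
#!/bin/bash
# Print one uniquely named "server" line per control-plane node.
# Arguments are name=ip pairs; every server listens on port 6443.
render_backend_servers() {
  port=6443
  for pair in "$@"; do
    # ${pair%%=*} is the part before "=", ${pair#*=} the part after it.
    printf '    server %s %s:%s check\n' "${pair%%=*}" "${pair#*=}" "$port"
  done
}
```

For this cluster the call would be `render_backend_servers k8s01=10.200.16.101 k8s02=10.200.16.102 k8s03=10.200.16.103`. After writing the file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration without starting the service.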

- keepalived main configuration

The two nodes use different configurations

cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.default

############## On k8s01 ################

cat>/etc/keepalived/keepalived.conf<<EOF

global_defs {

router_id LVS_DEVEL

}

vrrp_script check_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state MASTER # MASTER on k8s01
interface ens192 # name of the physical NIC
virtual_router_id 51
priority 101 # higher than on the BACKUP node

authentication {

auth_type PASS

auth_pass 42

}

virtual_ipaddress {

10.200.16.100

}

track_script {

check_apiserver

}

}

EOF

############## On k8s02 ################

cat>/etc/keepalived/keepalived.conf<<EOF

global_defs {

router_id LVS_DEVEL

}

vrrp_script check_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state BACKUP # BACKUP on k8s02
interface ens192 # name of the physical NIC
virtual_router_id 51
priority 100 # lower than on the MASTER node

authentication {

auth_type PASS

auth_pass 42

}

virtual_ipaddress {

10.200.16.100

}

track_script {

check_apiserver

}

}

EOF
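The two keepalived files differ only in `state` and `priority` (and possibly the interface name). A minimal sketch that renders either variant from one template is shown below; `render_keepalived_conf` is a hypothetical helper, and all other values are copied from the configurations above.

```shell
#!/bin/bash
# Render keepalived.conf for one node on stdout.
# $1: MASTER or BACKUP, $2: priority, $3: interface (defaults to ens192).
render_keepalived_conf() {
  state="$1"
  priority="$2"
  iface="${3:-ens192}"
  cat <<EOF
global_defs {
    router_id LVS_DEVEL
}
vrrp_script check_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 3
    weight -2
    fall 10
    rise 2
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    authentication {
        auth_type PASS
        auth_pass 42
    }
    virtual_ipaddress {
        10.200.16.100
    }
    track_script {
        check_apiserver
    }
}
EOF
}
```

On k8s01 this would be used as `render_keepalived_conf MASTER 101 > /etc/keepalived/keepalived.conf`, and on k8s02 as `render_keepalived_conf BACKUP 100 > /etc/keepalived/keepalived.conf`.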

- keepalived health-check script

Needed on both k8s01 and k8s02

cat>/etc/keepalived/check_apiserver.sh<<'EOF'

#!/bin/sh

errorExit() {

echo "*** $*" 1>&2

exit 1

}

APISERVER_VIP=10.200.16.100

APISERVER_DEST_PORT=6443

curl --silent --max-time 2 --insecure https://localhost:${APISERVER_DEST_PORT}/ -o /dev/null || errorExit "Error GET https://localhost:${APISERVER_DEST_PORT}/"

if ip addr | grep -q ${APISERVER_VIP}; then

curl --silent --max-time 2 --insecure https://${APISERVER_VIP}:${APISERVER_DEST_PORT}/ -o /dev/null || errorExit "Error GET https://${APISERVER_VIP}:${APISERVER_DEST_PORT}/"

fi

EOF

chmod a+x /etc/keepalived/check_apiserver.sh

- Start keepalived and haproxy

systemctl start keepalived haproxy

systemctl enable keepalived haproxy

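Control-plane nodes

Pull the component images

Run on k8s01, k8s02 and k8s03

Because of network restrictions, the init command usually cannot pull its images directly from within mainland China, so it is best to fetch them yourself beforehand.

# List the kubeadm packages for the target minor version
master_version=1.20
yum list --showduplicates kubeadm --disableexcludes=kubernetes | grep ${master_version}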

===

kubeadm.x86_64 1.20.8-0 @kubernetes

kubeadm.x86_64 1.20.0-0 kubernetes

kubeadm.x86_64 1.20.1-0 kubernetes

kubeadm.x86_64 1.20.2-0 kubernetes

kubeadm.x86_64 1.20.4-0 kubernetes

kubeadm.x86_64 1.20.5-0 kubernetes

kubeadm.x86_64 1.20.6-0 kubernetes

kubeadm.x86_64 1.20.7-0 kubernetes

kubeadm.x86_64 1.20.8-0 kubernetes

full_version=1.20.8

kubeadm config images list --kubernetes-version=${full_version}

===

k8s.gcr.io/kube-apiserver:v1.20.8

k8s.gcr.io/kube-controller-manager:v1.20.8

k8s.gcr.io/kube-scheduler:v1.20.8

k8s.gcr.io/kube-proxy:v1.20.8

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0

Based on the versions printed above, adjust the pause, etcd and coredns versions in the script below.

cat>images-pull.sh<<EOF

#!/bin/bash

# kubeadm config images list

images=(

kube-apiserver:v${full_version}

kube-controller-manager:v${full_version}

kube-scheduler:v${full_version}

kube-proxy:v${full_version}

pause:3.2 # edit me
etcd:3.4.13-0 # edit me
coredns:1.7.0 # edit me

)

for imageName in \${images[@]};

do

docker pull registry.aliyuncs.com/google_containers/\${imageName}

docker tag registry.aliyuncs.com/google_containers/\${imageName} k8s.gcr.io/\${imageName}

docker rmi registry.aliyuncs.com/google_containers/\${imageName}

done

EOF

bash images-pull.sh
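Instead of editing the version numbers by hand, the image list can be taken straight from kubeadm and rewritten to point at the mirror. This is a sketch under two assumptions: the mirror carries every image under the same short name, and the image names are flat (`k8s.gcr.io/<name>:<tag>`), as in the 1.20 output above. `to_mirror` and `pull_via_mirror` are hypothetical helper names.

```shell
#!/bin/bash
# Rewrite image names read from stdin so that they point at a mirror.
# $1: mirror prefix (defaults to registry.aliyuncs.com/google_containers).
to_mirror() {
  mirror="${1:-registry.aliyuncs.com/google_containers}"
  sed -E "s#^k8s\.gcr\.io/#${mirror}/#"
}

# Pull every image kubeadm asks for through the mirror, retag it under its
# original k8s.gcr.io name, then drop the mirror tag.
# $1: Kubernetes version without the leading "v", e.g. 1.20.8
pull_via_mirror() {
  kubeadm config images list --kubernetes-version="$1" | while read -r image; do
    mirrored=$(echo "$image" | to_mirror)
    # </dev/null keeps docker from consuming the loop's input.
    docker pull "$mirrored" < /dev/null \
      && docker tag "$mirrored" "$image" \
      && docker rmi "$mirrored"
  done
}
```

With `full_version=1.20.8` set as above, `pull_via_mirror "${full_version}"` would perform the same pull, tag and cleanup steps as images-pull.sh.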

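Install the first control-plane node (k8s01)

(info: https://kubernetes.io/docs/reference/setup-tools/kubeadm/kubeadm-init/)

Install using a configuration file

kubeadm config print init-defaults > kubeadm.yaml.default

Detailed description of the configuration parameters

Start from the generated defaults and adjust them as needed. Kubernetes changes quickly, so the configuration below may not be correct for your version.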

cat > kubeadm.yaml << EOF
# kubeadm.yaml: the default configuration with modifications
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.8272827282sksksu # edit me: your own token, in the form [a-z0-9]{6}.[a-z0-9]{16}
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.200.16.101 # edit me: physical IP of the first control-plane node
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock # for containerd use /run/containerd/containerd.sock
  name: k8s01 # edit me; in principle it defaults to the hostname of the node
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager:
  extraArgs:
    address: 0.0.0.0 # expose kube-controller-manager for later Prometheus monitoring
scheduler:
  extraArgs:
    address: 0.0.0.0 # expose kube-scheduler for later Prometheus monitoring
controlPlaneEndpoint: "k8sapi:8443" # added: k8sapi is the address of the apiserver load balancer
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
# The default image repository k8s.gcr.io is mostly unreachable from mainland China, so the Alibaba Cloud mirror is used here; pre-pulling the images is still recommended
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v${full_version} # edit me: the version you want to install
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/16 # edit me: the Service subnet
  podSubnet: 10.97.0.0/16 # edit me: the Pod subnet
---
# appended: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
# appended: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF

# Run the initialization
kubeadm init --config kubeadm.yaml
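The bootstrap token in kubeadm.yaml cannot be an arbitrary string: kubeadm expects six lowercase alphanumeric characters, a dot, then sixteen more. The sketch below checks a candidate before it goes into the file; `is_valid_bootstrap_token` is a hypothetical helper name.

```shell
#!/bin/bash
# Return 0 if $1 has the bootstrap token shape [a-z0-9]{6}.[a-z0-9]{16}.
is_valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```

For example, `is_valid_bootstrap_token abcdef.8272827282sksksu` succeeds. Alternatively, `kubeadm token generate` prints a random token that already has the right shape.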

Configuration flow of init:

Configure kubectl access to the cluster

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# kubectl command completion

source <(kubectl completion bash)

# You should now deploy a pod network to the cluster.

# Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

# https://kubernetes.io/docs/concepts/cluster-administration/addons/

# Add a user context to the config to make switching users easier

kubectl config set-context admin --cluster=kubernetes --user=kubernetes-admin

Adjust the kube-controller-manager and scheduler configuration

# Check the status
kubectl get cs
# You may see connection-refused errors
controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused
scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused
# The cause: kubeadm starts both components with --port=0, which disables the insecure HTTP ports 10252 and 10251 that kubectl get cs probes
# Remove the port flag (- --port=0) from both manifests to restore the default ports
sed -i '/- --port=0/d' /etc/kubernetes/manifests/kube-scheduler.yaml
sed -i '/- --port=0/d' /etc/kubernetes/manifests/kube-controller-manager.yaml
# Restart kubelet
systemctl restart kubelet
# Check again

kubectl get cs

===

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

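The kube-controller-manager flag terminated-pod-gc-threshold sets how many terminated Pods may exist before the garbage collector starts deleting them; the default is 12500. It is unclear whether a value this high affects performance or risks exhausting resources.

Configure the network plugin

Two network plugins are commonly used:

The first is flannel, which relies more on iptables rules.

The second is calico, which relies more on routing rules.

- flannel (the plugin used in this document)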

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Change "Network": "10.244.0.0/16" in the file to your own podSubnet, i.e. the --pod-network-cidr used during kubeadm init
kubectl apply -f kube-flannel.yml

flannel creates a VTEP device named flannel.1 on every node and distributes the podSubnet to all flannel.1 devices.

- calico

Installation guide: https://docs.projectcalico.org/getting-started/kubernetes/quickstart

kubectl apply -f https://docs.projectcalico.org/manifests/tigera-operator.yaml

wget https://docs.projectcalico.org/manifests/custom-resources.yaml

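# In custom-resources.yaml, change the network to your pod subnet
kubectl apply -f custom-resources.yaml

Note: calico generates CNI configuration files, and these cause problems if you later switch back to flannel, for example failures to create the CNI network.

You can locate everything with find / -name '*calico*' and then delete it.

By default, kubeadm prevents Pods from being scheduled on master nodes. The command below removes that restriction from all master nodes:

kubectl taint nodes --all node-role.kubernetes.io/master-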

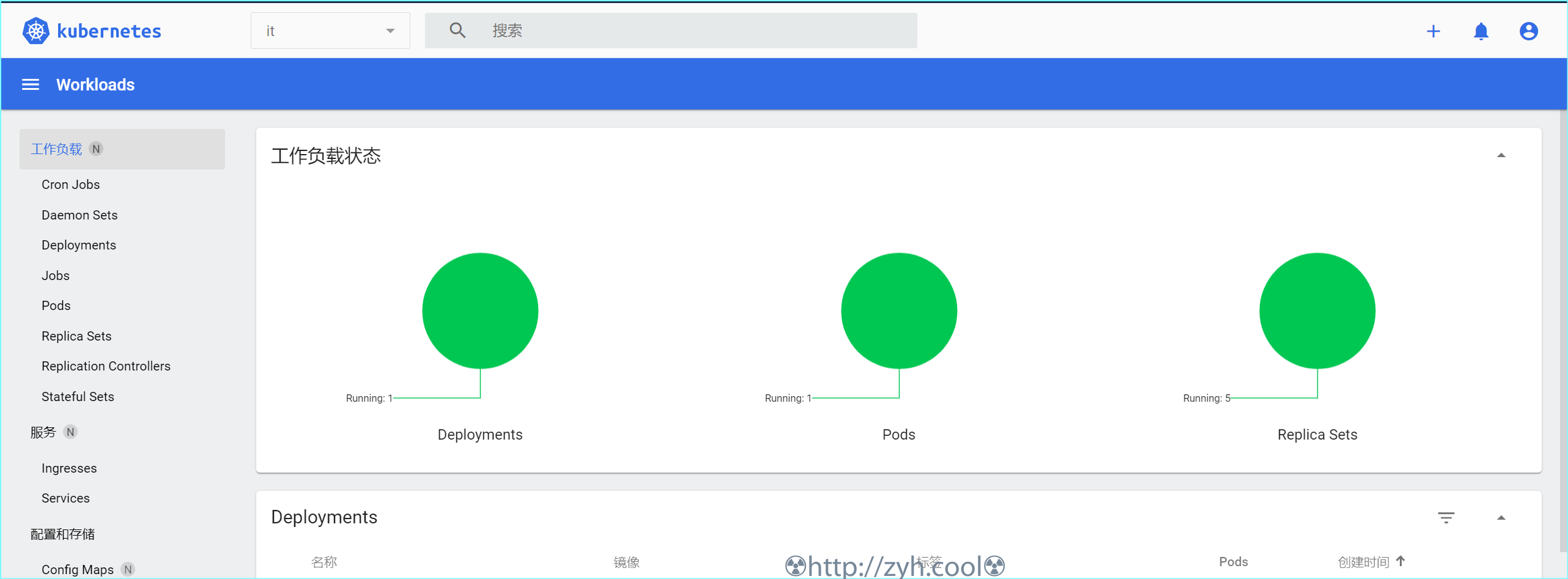

Configure the dashboard web console

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.3/aio/deploy/recommended.yaml && mv recommended.yaml kubernetes-dashboard.yaml

- If you have deployed metallb or have a cloud provider LB, you only need to change the Service type in kubernetes-dashboard.yaml to LoadBalancer

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  type: LoadBalancer # add this line
  selector:
    k8s-app: kubernetes-dashboard

Then access it through the LB address

- If you have neither metallb nor a cloud LB, change the Service type to NodePort and pin the pods to a fixed node, for example k8s01

# A few things need to change here
#---
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  type: NodePort # add this line
#---
# The pod placement must change as well: once the cluster has several nodes, the pods may be scheduled onto other nodes. Here we force them onto the master. Find the kind: Deployment objects and set nodeName: <master hostname> in both pod specs
#---
    spec:
      nodeName: k8s01 # add this line
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.3
    spec:
      nodeName: k8s01 # add this line
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.4
#---
# Then create the resources
kubectl create -f kubernetes-dashboard.yaml

Then open https://k8s01:30001

- Get a token for the web UI

The dashboard itself is a pod. For a pod to access other Kubernetes resources, it needs a service account (serviceaccount).

Create a service account admin-user and grant it the cluster-admin ClusterRole through a ClusterRoleBinding.

# Add an access token
# Official docs: https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md
# Create kube-db-auth.yaml with the content below

cat > kube-db-auth.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

kubectl apply -f kube-db-auth.yaml

# Retrieve the token with the command below

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

Get the commands other nodes use to join the cluster (k8s01)

Adding a control-plane node

# Command format
kubeadm join k8sapi:8443 \
    --token xxx \
    --discovery-token-ca-cert-hash xxx \
    --control-plane --certificate-key xxx

Adding a worker (Node) node

# Command format
kubeadm join k8sapi:8443 \
    --token xxx \
    --discovery-token-ca-cert-hash xxx

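The join command is valid for 24 hours; after it expires, the command must be regenerated.

Regenerating the commands

Command for adding a worker node

# Command for adding a worker node
add_node_command=`kubeadm token create --print-join-command`
echo ${add_node_command}

Command for adding a control-plane node

# Create the --certificate-key
join_certificate_key=`kubeadm init phase upload-certs --upload-certs|tail -1`
# Combine the pieces
echo "${add_node_command} --control-plane --certificate-key ${join_certificate_key}"

Add k8s02 and k8s03 to the cluster as control-plane nodes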

kubeadm join k8sapi:8443 \
    --token h77qhb.cjj8ig4t2v15dncm \
    --discovery-token-ca-cert-hash sha256:b7138bb76d19d3d7baf832e46559c400f4fe544d8f0ee81a87f \
    --control-plane --certificate-key fd996c7c0c2047c9c10a377f25a332bf4b5b00ca
# Remove --port=0 on these nodes as well
sed -i '/- --port=0/d' /etc/kubernetes/manifests/kube-scheduler.yaml
sed -i '/- --port=0/d' /etc/kubernetes/manifests/kube-controller-manager.yaml
# Restart kubelet
systemctl restart kubelet
# Follow the printed instructions; they are usually the commands below
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
# Wait a minute, then check that all pods are healthy

kubectl get pod -n kube-system

===

NAME READY STATUS RESTARTS AGE

coredns-74ff55c5b-9gkgk 1/1 Running 0 2d13h

coredns-74ff55c5b-tndb5 1/1 Running 0 15d

etcd-k8s01 1/1 Running 0 15d

etcd-k8s02 1/1 Running 0 7m12s

etcd-k8s03 1/1 Running 0 15d

kube-apiserver-k8s01 1/1 Running 0 26h

kube-apiserver-k8s02 1/1 Running 0 7m11s

kube-apiserver-k8s03 1/1 Running 0 26h

kube-controller-manager-k8s01 1/1 Running 0 15d

kube-controller-manager-k8s02 1/1 Running 0 82s

kube-controller-manager-k8s03 1/1 Running 1 15d

kube-flannel-ds-42hng 1/1 Running 0 15d

kube-flannel-ds-b42qj 1/1 Running 0 15d

kube-flannel-ds-ss8w5 1/1 Running 0 15d

kube-proxy-2xxpn 1/1 Running 0 15d

kube-proxy-pg7j7 1/1 Running 0 15d

kube-proxy-txh2t 1/1 Running 0 15d

kube-scheduler-k8s01 1/1 Running 0 15d

kube-scheduler-k8s02 1/1 Running 0 82s

kube-scheduler-k8s03 1/1 Running 0 15d

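Pitfall: etcd already holds node information

If etcd still holds member entries for k8s02 or k8s03 from an earlier attempt, remove them first. Otherwise the join hangs at the etcd check and eventually fails.

How to remove the etcd entries:

# Print the etcd member IDs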

ETCD=`docker ps|grep etcd|grep -v POD|awk '{print $1}'`

docker exec \

-it ${ETCD} \

etcdctl \

--endpoints https://127.0.0.1:2379 \

--cacert /etc/kubernetes/pki/etcd/ca.crt \

--cert /etc/kubernetes/pki/etcd/peer.crt \

--key /etc/kubernetes/pki/etcd/peer.key \

member list

===

2f401672c9a538f1, started, k8s01, https://10.200.16.101:2380, https://10.200.16.101:2379, false

d6d9ca2a70f6638e, started, k8s02, https://10.200.16.102:2380, https://10.200.16.102:2379, false

ee0e9340a5cfb4d7, started, k8s03, https://10.200.16.103:2380, https://10.200.16.103:2379, false

# Suppose k8s03 is the member to remove

ETCD=`docker ps|grep etcd|grep -v POD|awk '{print $1}'`

docker exec \

-it ${ETCD} \

etcdctl \

--endpoints https://127.0.0.1:2379 \

--cacert /etc/kubernetes/pki/etcd/ca.crt \

--cert /etc/kubernetes/pki/etcd/peer.crt \

--key /etc/kubernetes/pki/etcd/peer.key \

member remove ee0e9340a5cfb4d7

With containerd, the command looks like this

crictl exec -it <etcd_id> etcdctl --endpoints https://127.0.0.1:2379 --cacert /etc/kubernetes/pki/etcd/ca.crt --cert /etc/kubernetes/pki/etcd/peer.crt --key /etc/kubernetes/pki/etcd/peer.key get --prefix /coreos.com/
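Copying the member ID by hand from the `member list` output is error-prone. As a sketch, the hypothetical helper `etcd_member_id` below picks the ID for a given member name, assuming the comma-separated output format shown above.

```shell
#!/bin/bash
# Print the ID of the etcd member with the given name, reading the output
# of "etcdctl member list" on stdin.
# $1: member name, e.g. k8s03
etcd_member_id() {
  # Fields are separated by ", ": ID, status, name, peer URL, client URL, learner.
  awk -F', ' -v name="$1" '$3 == name {print $1}'
}
```

When piping the real `member list` output into `etcd_member_id k8s03`, run `docker exec` without `-it`, since an allocated terminal adds carriage returns to the output.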

Worker (Node) nodes

The join command is printed at the end of the init output on k8s01. When joining a worker, omit --control-plane --certificate-key.

kubeadm join k8sapi:8443 \
    --token h77qhb.cjj8ig4t2v15dncm \
    --discovery-token-ca-cert-hash sha256:b7138bb76d19d3d7baf832e46559c400f4fe544d8f0ee81a87f

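Other topics

Showing the correct visible resources inside containers

By default, whatever resource limits you set, the resources visible inside a container equal those of the node. In other words, /proc/meminfo inside the container does not reflect the limits you configured, which causes a lot of trouble.

Many programs derive their settings from the visible resources, for example nginx's auto setting or the JVM memory parameters.

Since Java 8u191, the JVM is container-aware by default (-XX:+UseContainerSupport), so it does not need the lxcfs approach described below.

In addition, inside containers it is advisable to set only the following memory parameter:

-XX:MaxRAMPercentage: maximum heap size as a percentage of available memory. 50%-75% of the container limit is recommended, since other memory needs headroom too.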
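To make the percentage concrete, the arithmetic can be sketched as follows. `max_heap_mib` is a hypothetical helper that only illustrates the calculation with integer division; the JVM performs its own computation at startup.

```shell
#!/bin/bash
# Approximate maximum heap in MiB for a given container memory limit.
# $1: container memory limit in MiB, $2: MaxRAMPercentage as an integer.
max_heap_mib() {
  echo $(( $1 * $2 / 100 ))
}
```

For a container limited to 2048 MiB and -XX:MaxRAMPercentage=75, `max_heap_mib 2048 75` prints 1536, which leaves 512 MiB for everything outside the heap.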

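A common community approach is to use lxcfs to improve resource visibility. lxcfs is an open-source FUSE (filesystem in userspace) implementation built to support LXC containers; it also works with Docker containers.

Through the userspace filesystem, LXCFS provides the following procfs files inside the container.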

/proc/cpuinfo

/proc/diskstats

/proc/meminfo

/proc/stat

/proc/swaps

/proc/uptime

Of these, cpuinfo and meminfo are the ones relevant to resource limits.

The current community procedure is as follows:

- Install the fuse-libs package on all nodes.

yum install -y fuse-libs

- Deploy the lxcfs resource objects in the cluster

git clone https://github.com/denverdino/lxcfs-admission-webhook.git
cd lxcfs-admission-webhook
vim deployment/lxcfs-daemonset.yaml
=== Fix the apiVersion in the manifest ===
The code currently in the repository is outdated (the API version it uses was already deprecated by 1.18). To find the right version, consult the official Kubernetes API reference
=== https://kubernetes.io/docs/reference/generated/kubernetes-api/v1.19/#daemonset-v1-apps
apiVersion: apps/v1
kubectl apply -f deployment/lxcfs-daemonset.yaml
kubectl get pod
# Wait until the lxcfs pods are Running
# If the pods keep failing, run the following commands:
kubectl delete -f deployment/lxcfs-daemonset.yaml
rm -rf /var/lib/lxcfs
kubectl apply -f deployment/lxcfs-daemonset.yaml
deployment/install.sh
kubectl get secrets,pods,svc,mutatingwebhookconfigurations

- Enable lxcfs injection for the relevant namespace, for example default

kubectl label namespace default lxcfs-admission-webhook=enabled

- Restart the pods that have resource limits; /proc/cpuinfo and /proc/meminfo will then show the correct values.

Cluster metrics service

Add metrics-server: https://github.com/kubernetes-sigs/metrics-server#configuration

wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

# In the metrics-server container spec, add the extra startup arguments (args)

args:

- --kubelet-preferred-address-types=InternalIP

- --kubelet-insecure-tls

kubectl apply -f components.yaml

kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes"

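The kubectl top command needs these metrics to produce output.

kubectl command reference

https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands

Some frequently used commands

- kubectl explain

A very useful command that helps when writing manifests

It prints a detailed description of a field, for example deployment.metadata

Fields marked -required- are mandatory

kubectl explain deployment.metadata

- -o yaml --dry-run=client

Print the YAML that a create command would submit, without creating anything

kubectl create serviceaccount mysvcaccount -o yaml --dry-run=client

- Handy utility containers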

kubectl run cirros-$RANDOM -it --rm --restart=Never --image=cirros -- /bin/sh

kubectl run dnstools-$RANDOM -it --rm --restart=Never --image=infoblox/dnstools:latest

Configuration used by kubectl

➜ kubectl config view

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://<api_server>:8443

name: kubernetes-qa

- cluster:

certificate-authority-data: DATA+OMITTED

server: https://<api_server>:6443

name: kubernetes-prod

contexts:

- context:

cluster: kubernetes-prod

user: kubernetes-admin

name: prod

- context:

cluster: kubernetes-qa

user: user-kfktf786bk

name: qa

current-context: qa ## the context currently in use

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

- name: user-kfktf786bk

user:

client-certificate-data: REDACTED

client-key-data: REDACTED

Removal and cleanup

# Remove a node from the cluster
# Run on a master
kubectl drain <NODE_ID> --delete-local-data --force --ignore-daemonsets
kubectl delete node <NODE_ID>
# Run on the node being removed

kubeadm reset

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

ipvsadm --clear;

ip link set cni0 down && ip link delete cni0;

ip link set flannel.1 down && ip link delete flannel.1;

ip link set kube-ipvs0 down && ip link delete kube-ipvs0;

ip link set dummy0 down && ip link delete dummy0;

rm -rf /var/lib/cni/

rm -rf $HOME/.kube;